GPT-2 and other tools can now generate text. But so far, the results are not that... impressive. Let's talk about neural text degeneration.

It’s incredible how much text analysis and text generation can do these days. Just look at OpenAI’s GPT-2 model, which handles many text-related tasks at a state-of-the-art level. However, generating text with neural networks still entails a number of problems that make the resulting text look unnatural.

Let’s talk about a few of these problems, which were highlighted and addressed in two papers published in 2019: The Curious Case of Neural Text Degeneration [1] and Neural Text Generation with Unlikelihood Training [2]. You’ve probably noticed that the first paper calls this crooked text generation degeneration. Well, that’s a clever name, and that’s exactly what we’ll call it.

Open-Ended Generation - How It Works

Let’s consider the so-called open-ended generation: the algorithm (nowadays almost always a neural network) receives an input text passage, often referred to as the context, and its task is to continue it. Unlike non-open-ended generation, where the continuation has to meet additional requirements (e.g., to translate or summarize the text or to answer a question), open-ended generation only needs the algorithm to finish the story. For instance, you might need to continue the sentence:

Humpty Dumpty sat on a wall, Humpty Dumpty had a great…

One way to go on with this sentence could be:

…time sitting on that wall reminiscing about the good old days.

Problems with Open-Ended Generation

If you look at how modern algorithms deal with this task, you’ll come across the problems described below.

First off, a few words about what decoding is. When we generate text word by word, there are always many candidates for the next word. The language model assigns a probability to each of them. In the example above, the probabilities might be:

fall — 0.3

day — 0.1

chance — 0.05

time — 0.02

deal — 0.01…

The goal here is to make the whole sentence as likely as possible. In mathematics, this is measured by a function called the likelihood (here, the joint probability of the words in the sentence), which in this case is optimized sequentially, one word at a time.
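To make the idea of likelihood concrete, here is a minimal sketch of how the probability of a whole continuation is built from per-word probabilities. Only the 0.3 for "fall" comes from the example above; the other two step probabilities and the function name are made up for illustration.

```python
import math

def sequence_log_likelihood(step_probs):
    """Sum the log-probabilities of the word chosen at each step.

    Working with logs avoids multiplying many small numbers together.
    """
    return sum(math.log(p) for p in step_probs)

# Hypothetical probabilities of three consecutive words, each conditioned
# on everything generated before it (0.3 is "fall" from the example).
step_probs = [0.3, 0.5, 0.2]

log_likelihood = sequence_log_likelihood(step_probs)
joint_probability = math.exp(log_likelihood)  # the product 0.3 * 0.5 * 0.2

print(f"log-likelihood: {log_likelihood:.3f}")
print(f"joint probability: {joint_probability:.3f}")
```

Every decoding strategy discussed here is, in one way or another, an attempt to pick words so that this number stays high.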

1. Greedy search problems

The simplest decoding strategy, greedy search, always chooses the single most likely next word. The downside is that it can’t look into the future: it may select several high-likelihood words in a row and end up with a text that is difficult to continue, where all the subsequent words have a very small likelihood.
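Here is a small sketch of that short-sightedness. The toy "language model" below is entirely hypothetical: it is just a lookup table that depends on the previous word only, with numbers chosen so that the most likely first word leads to a dead end.

```python
# Hypothetical toy model: next-word probabilities given the previous word.
TOY_MODEL = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.2, "dog": 0.2, "fox": 0.2, "owl": 0.2, "bee": 0.2},
    "a": {"cat": 0.9, "dog": 0.1},
}

def greedy_decode(model, start, steps):
    """At every step, take the single most likely next word."""
    words, prob = [start], 1.0
    for _ in range(steps):
        options = model.get(words[-1])
        if not options:  # the toy model has nothing to say after this word
            break
        word, p = max(options.items(), key=lambda kv: kv[1])
        words.append(word)
        prob *= p
    return words[1:], prob

greedy_words, greedy_prob = greedy_decode(TOY_MODEL, "<s>", steps=2)
print(greedy_words, round(greedy_prob, 2))
```

Greedy search commits to "the" because 0.6 beats 0.4, and only then discovers that nothing after "the" is very likely. In this toy table, the sequence "a cat" would have had three times the joint probability.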

2. Beam search degenerate text

Alternatively, the beam search strategy keeps track of the b most likely options (partial continuations) while generating the text. The idea is the following: if the most likely option turns out to be difficult to continue and ceases to be the most likely because of that, the algorithm still has the other b-1 options in stock.

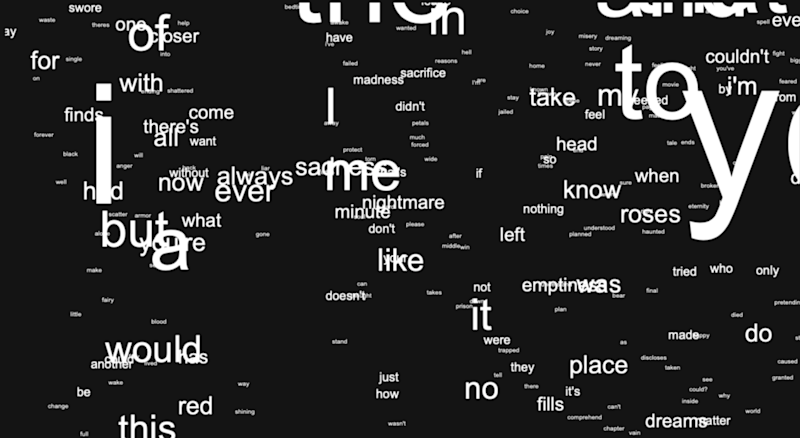

![Figure 1 from [1]: the continuation generated by the beam search strategy (from GPT-2)](http://images.ctfassets.net/pzhspng2mvip/1D09JrQMSnfDYUV4G6eISZ/fff8e7377fb0be26887a73644ff0b6f1/Figure_1_from_-1--_the_continuation_generated_by_the_beam_search_strategy__from_GPT-2__.png)

As shown in Figure 1, even when generated from the state-of-the-art GPT-2, beam search still leads to degenerate text.
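For readers who prefer code, the beam search idea can be sketched in a few lines. This is a simplified illustration, not the implementation used in [1]: the toy lookup-table model is hypothetical, and real implementations work with log-probabilities and usually add length normalization.

```python
# Hypothetical toy model: next-word probabilities given the previous word.
TOY_MODEL = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.2, "dog": 0.2, "fox": 0.2, "owl": 0.2, "bee": 0.2},
    "a": {"cat": 0.9, "dog": 0.1},
}

def beam_search(model, start, steps, beam_width):
    """Keep the `beam_width` most likely partial continuations at each step."""
    beams = [([start], 1.0)]
    for _ in range(steps):
        candidates = []
        for words, prob in beams:
            options = model.get(words[-1], {})
            if not options:
                # Nothing to extend with: carry the hypothesis over unchanged.
                candidates.append((words, prob))
                continue
            for word, p in options.items():
                candidates.append((words + [word], prob * p))
        # Rank all extensions by joint probability and keep the best ones.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    return [(words[1:], prob) for words, prob in beams]

beams = beam_search(TOY_MODEL, "<s>", steps=2, beam_width=2)
for words, prob in beams:
    print(words, round(prob, 2))
```

With b = 2, the option starting with "a" survives the first step even though it is not the most likely one, and it ends up winning after the second step. Note that this only shows how beam search finds high-likelihood text; as Figure 1 illustrates, high likelihood is not the same thing as natural text.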

Why greedy and beam search don’t work very well

Human speech is very complex and multifaceted, and that’s why text generation still struggles to sound completely natural. Human text shows much greater variance in word probability than machine-generated text. Figure 2 shows that word probabilities in natural human text fluctuate considerably, while in beam search-generated text they stay unnaturally flat. By the way, machine texts have 40% fewer unique words (i.e., occurring only once) than human texts [2].

![Figure 2 from [1]: word probabilities in machine and natural texts](http://images.ctfassets.net/pzhspng2mvip/47TADWCHoCGHFOK1FTyfhb/0584767449e9c163e0856cfa91e68311/Figure_2_from_-1--_word_probabilities_in_machine_and_natural_texts_.png)

Figure 2 from [1]: word probabilities in machine and natural texts

The authors of [2] argue that it is the use of likelihood itself that causes the texts to come out unnatural. In generated texts, high-frequency words are more common than they should be, while low-frequency words are much less common.

Another idea is that modern neural network architectures (specifically, the so-called transformer, which is the basis for the GPT-2 model) are not well suited for open-ended text generation. For example, in generated text a word already occurs among the previous 128 words in 63% of cases on average, whereas in human text this happens in only 43% of cases.
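A repetition statistic of this kind is easy to compute yourself. The sketch below is one plausible reading of it, namely the share of words that already appear within a fixed window of preceding words; the exact procedure behind the figures above may differ, and the function name and whitespace tokenization are assumptions made for illustration.

```python
def repeat_rate(tokens, window=128):
    """Fraction of tokens that already occur among the preceding `window` tokens."""
    if not tokens:
        return 0.0
    repeats = 0
    for i, token in enumerate(tokens):
        start = max(0, i - window)
        if token in tokens[start:i]:
            repeats += 1
    return repeats / len(tokens)

# Naive whitespace tokenization, good enough for a toy example.
sample_tokens = "the cat sat on the mat the end".split()
rate = repeat_rate(sample_tokens)
print(f"{rate:.2f}")
```

Running the same function on a generated continuation and on a human-written one gives a quick, if rough, signal of how repetitive the generated text is.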

Finally, all modern language models are trained on large text corpora that do not take into account the specifics of the tasks for which the models will be used.

Strategies for Better Next Word Prediction

Two approaches can help with text degeneration:

1. Top-k sampling & Nucleus (Top-p) sampling in a stochastic decoding scheme

One approach [1] is to use a stochastic decoding scheme. Instead of always generating the most likely word, you choose the next word randomly, according to the probabilities calculated for the candidate words. The most likely word will then not necessarily be generated: in the example above, it will be chosen in only 30% of cases. There is, however, a risk of generating a completely irrelevant word that has been assigned a negligible but nonzero probability. For that reason, the following strategies are used:

Top-k Sampling samples the next word from the k most probable choices. In the example above, Top-2 Sampling chooses between fall and day. Because all other words are discarded, the probabilities have to be recalculated: fall gets a probability of 0.3 / (0.3 + 0.1) = 0.75, and day gets the remaining 0.25.
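As a quick illustration, here is a minimal sketch of Top-k Sampling on the example distribution. Only the five probabilities listed earlier are used (the rest of the vocabulary is elided in the example), and the function names are made up for this post.

```python
import random

# The five candidate words listed in the example; the rest is elided.
NEXT_WORD = {"fall": 0.3, "day": 0.1, "chance": 0.05, "time": 0.02, "deal": 0.01}

def top_k_distribution(probs, k):
    """Keep the k most probable words and renormalize so they sum to 1."""
    top = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in top)
    return {word: p / total for word, p in top}

def sample_word(dist, rng=random):
    """Draw one word at random according to the given probabilities."""
    words = list(dist)
    weights = [dist[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

top2 = top_k_distribution(NEXT_WORD, k=2)
print({word: round(p, 2) for word, p in top2.items()})
print(sample_word(top2))
```

The renormalized probabilities match the hand calculation: about 0.75 for fall and 0.25 for day. Words outside the top k can never be sampled, which is exactly what protects against the irrelevant low-probability tail.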

Nucleus (Top-p) Sampling selects the smallest set of highest-probability tokens whose cumulative probability reaches or exceeds the pre-chosen threshold p, and samples the next word from that set. For instance, Top-0.41 will let you choose between three words, fall, day, and chance, since

0.3 + 0.1 < 0.41,

0.3 + 0.1 + 0.05 >= 0.41
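The same selection can be written as a short loop. This is a sketch under the same assumptions as the example: only the five listed probabilities are used, and the function name is hypothetical.

```python
# The five candidate words listed in the example; the rest is elided.
NEXT_WORD = {"fall": 0.3, "day": 0.1, "chance": 0.05, "time": 0.02, "deal": 0.01}

def nucleus_distribution(probs, p):
    """Keep the most probable words until their cumulative probability
    reaches p, then renormalize the kept words so they sum to 1."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for word, prob in ranked:
        kept.append((word, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {word: prob / total for word, prob in kept}

nucleus = nucleus_distribution(NEXT_WORD, p=0.41)
print(list(nucleus))
```

Unlike Top-k, the number of words kept is not fixed: when the model is confident, the nucleus shrinks to a handful of words, and when the distribution is flat, it grows.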

Figure 3 shows the use of different decoding strategies:

![Figure 3 from [1]: text generation using different decoding strategies](http://images.ctfassets.net/pzhspng2mvip/30wkUmFOGtNU8FJMcxeuLT/4bd4204567fb4bc67b09a2163bbbd380/Figure_3_from_-1--_text_generation_using_different_decoding_strategies_.png)

2. Regularization

Another approach [2] to dealing with unnatural generated texts is to change the training objective. You can extend the likelihood function with components that penalize repeating words and sequences of words. In machine learning, adding components to the objective to make the solution better is called regularization, and it is a standard strategy. Figure 4 shows what happens if you use such regularization. Here, MLE is the standard maximum likelihood objective, and UL-token+seq is the objective with added penalties for repeating words and sequences of words.

![Figure 4 from [2]: examples of text generation with the objective and with penalizing repetitions](http://images.ctfassets.net/pzhspng2mvip/cgFSGXi3pvCRFtmm4PwmS/25e5373ce010fda6d3c981325e501ff3/Figure_4_from_-2--_examples_of_text_generation_with_the_objective_and_with_penalizing_repetitions.png)

![Figure 4 from [2]: examples of text generation with the objective and with penalizing repetitions](http://images.ctfassets.net/pzhspng2mvip/7yU0qJnxtfnSBnyncCfci9/2ebb83ac47d5fc3ebbede20430c77560/Figure_4_from_-2--_examples_of_text_generation_with_the_objective_and_with_penalizing_repetitions__2_.png)
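To give a feel for what such a penalty looks like, here is a rough sketch of the word-level part for a single generation step, as we understand it from [2]: the usual negative log-likelihood of the correct word, plus a term that grows when the model puts probability on words that have already appeared in the context. The function name, the toy probabilities, and the weight alpha are assumptions for illustration; the sequence-level penalty is not shown.

```python
import math

def token_unlikelihood_loss(probs, target, previous_tokens, alpha=1.0):
    """Loss for one step: likelihood term plus a penalty on repeated words.

    probs: the model's next-word probabilities (hypothetical numbers here)
    target: the word that actually comes next in the training text
    previous_tokens: the words seen so far in the context
    """
    # Words to discourage: everything already seen, except the true next word.
    negatives = set(previous_tokens) - {target}
    likelihood_term = -math.log(probs[target])
    penalty_term = -sum(math.log(1.0 - probs[w]) for w in negatives if w in probs)
    return likelihood_term + alpha * penalty_term

step_probs = {"wall": 0.5, "sat": 0.3, "fall": 0.2}
context = ["sat", "wall", "sat"]

plain_loss = -math.log(step_probs["fall"])
ul_loss = token_unlikelihood_loss(step_probs, "fall", context)
print(round(plain_loss, 3), round(ul_loss, 3))
```

The regularized loss is larger than the plain one here because the model assigns a lot of probability to "wall" and "sat", both of which would be repeats. Minimizing it pushes probability away from those words during training.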

By the way, the authors of [2] did an interesting study: they showed test subjects the beginning of a text (the context) and two continuations, and the subjects had to decide which continuation seemed more natural to them. The model with regularization was judged better than the standard model in 84% of cases; moreover, it was judged better than the human text in 48% of cases, which means the test subjects could hardly tell the machine-generated text from human writing.

Along with the dizzying success that neural text generation is now enjoying, it is also going through growing pains, as is the case with any booming field of research. Our ML Research department, which works with such technologies to create artificial intelligence, has been very excited to learn what text degeneration is all about.

We believe that this problem could be solved in the foreseeable future. It may very well be tackled with the above strategies. And then, well… Perhaps, the sequel to this article will be written entirely by a neural network. You never know. Applying Neural text-to-speech (NTTS) models to the predicted text may also lead to exciting applications.

[1] Ari Holtzman, Jan Buys, Maxwell Forbes, Yejin Choi, «The Curious Case of Neural Text Degeneration», https://arxiv.org/abs/1904.09751

[2] Sean Welleck, Ilia Kulikov, Stephen Roller, Emily Dinan, Kyunghyun Cho, Jason Weston, «Neural Text Generation with Unlikelihood Training», https://arxiv.org/abs/1908.04319